Biography

My name is Xiyang Wu. I am a Ph.D. candidate in Electrical and Computer Engineering at the University of Maryland, College Park, and a member of the GAMMA group. My research advisor is Prof. Dinesh Manocha. I hold a Master’s degree from the Georgia Institute of Technology, where I worked with Prof. Matthew Gombolay. Before that, I earned my Bachelor’s in Engineering from Tianjin University, supervised by Prof. Xiaodong Zhang.

My research focuses on multi-modal foundation models, with an emphasis on hallucination detection, mitigation, and physical reasoning under complex, real-world conditions. In parallel, I explore the integration of neural networks and large language models into robotic decision-making and navigation, aiming to improve robots’ situational awareness, robustly interpret human behaviors and intentions, and adapt their actions in dynamic, collaborative environments.

Please see my list of publications here.

I am currently on the job market and actively seeking internships and full-time opportunities. If my research aligns with your interests, I would be glad to connect.

Research Interests

- Robotics

- Embodied AI

- Reinforcement Learning

- Multi-Modality

- Vision-Language Models

Education

- Ph.D. in Electrical and Computer Engineering, University of Maryland, College Park, 2021 - 2026 (Expected)

- M.S. in Electrical and Computer Engineering, Georgia Institute of Technology, 2019 - 2021

- B.Eng. in Electrical Engineering (Honors Class), Tianjin University, 2015 - 2019

News

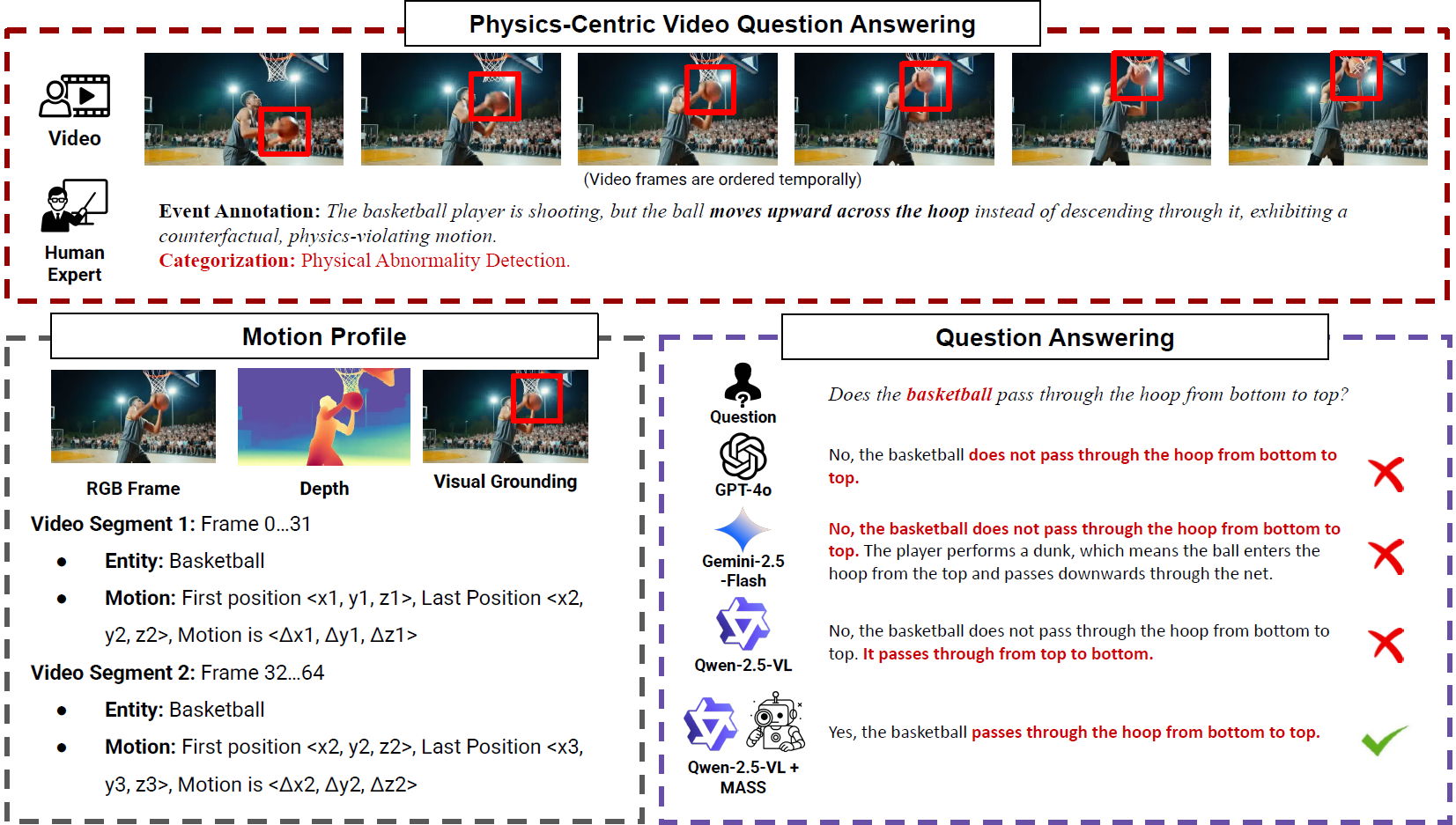

| Nov 2025: | We release a technical report introducing MASS-Bench, a physics-focused video benchmark, and MASS, a model-agnostic method that injects 3D motion and spatial–temporal cues into VLMs, yielding substantial gains and approaching closed-source SoTA performance on physics reasoning. |

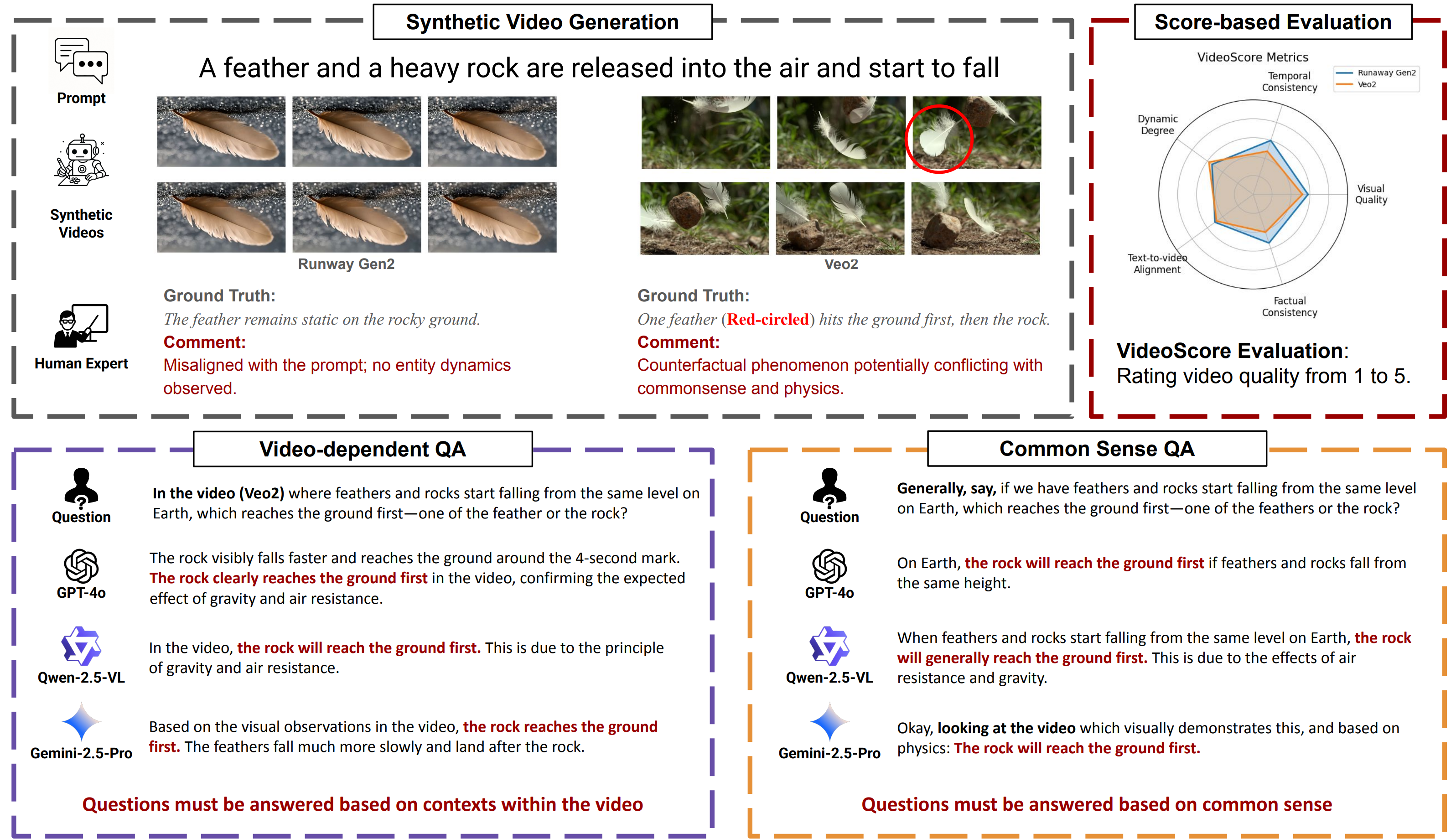

| Sep 2025: | VideoHallu was accepted by NeurIPS 2025! |

| Jun 2025: | One paper was accepted by IROS 2025! |

| May 2025: | We release a technical report introducing VideoHallu, a novel benchmark for hallucinations in synthetic video understanding over common sense and physics, with QA pairs requiring human-level reasoning. The benchmark is used to evaluate SoTA MLLMs, and post-training them on commonsense/physics data shows its impact on improving model reasoning. The project webpage is released here. |

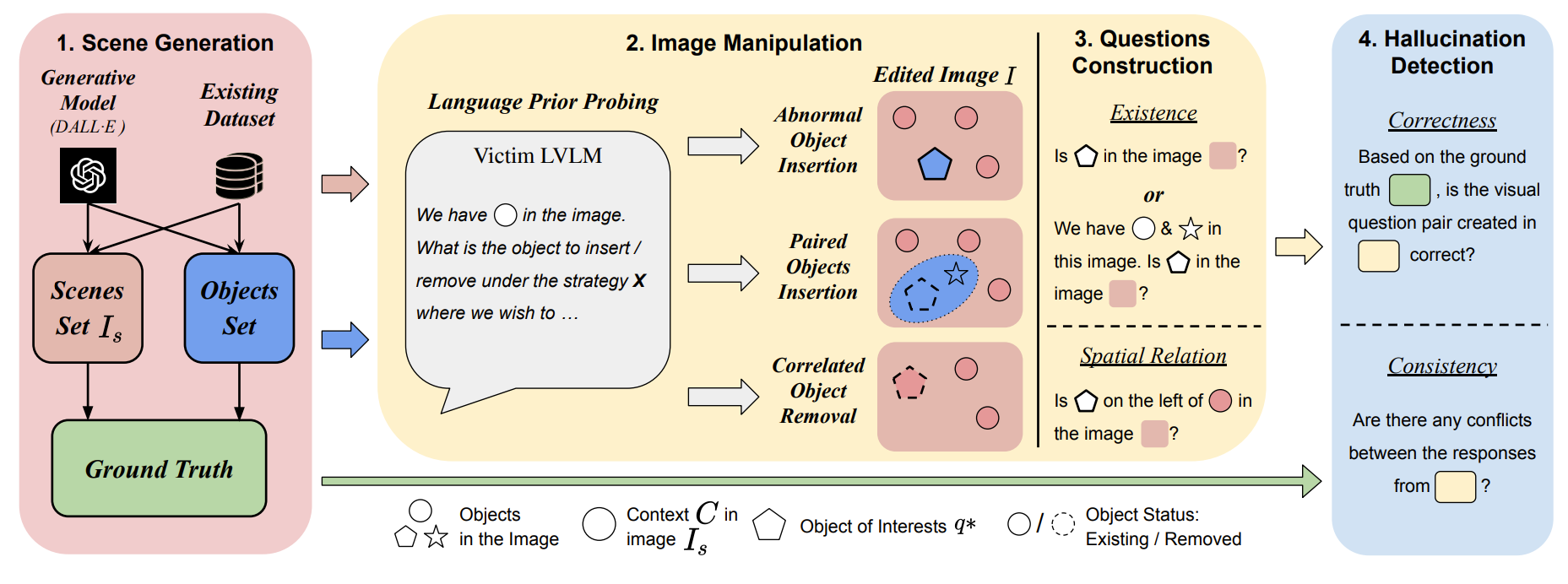

| Sep 2024: | AUTOHALLUSION was accepted by EMNLP 2024! |

| Jun 2024: | LANCAR and AGL-NET were accepted by IROS 2024! |

| Jun 2024: | We release a technical report, introducing a novel automatic benchmark generation approach, AUTOHALLUSION, which harnesses a few principal strategies to create diverse hallucination examples by probing the language modules in LVLMs for context cues. The project webpage is released here. |

| Apr 2024: | One paper was accepted by VLADR Workshop at CVPR 2024! |

| Feb 2024: | HallusionBench was accepted by CVPR 2024! The data, evaluation and code are available on GitHub. |

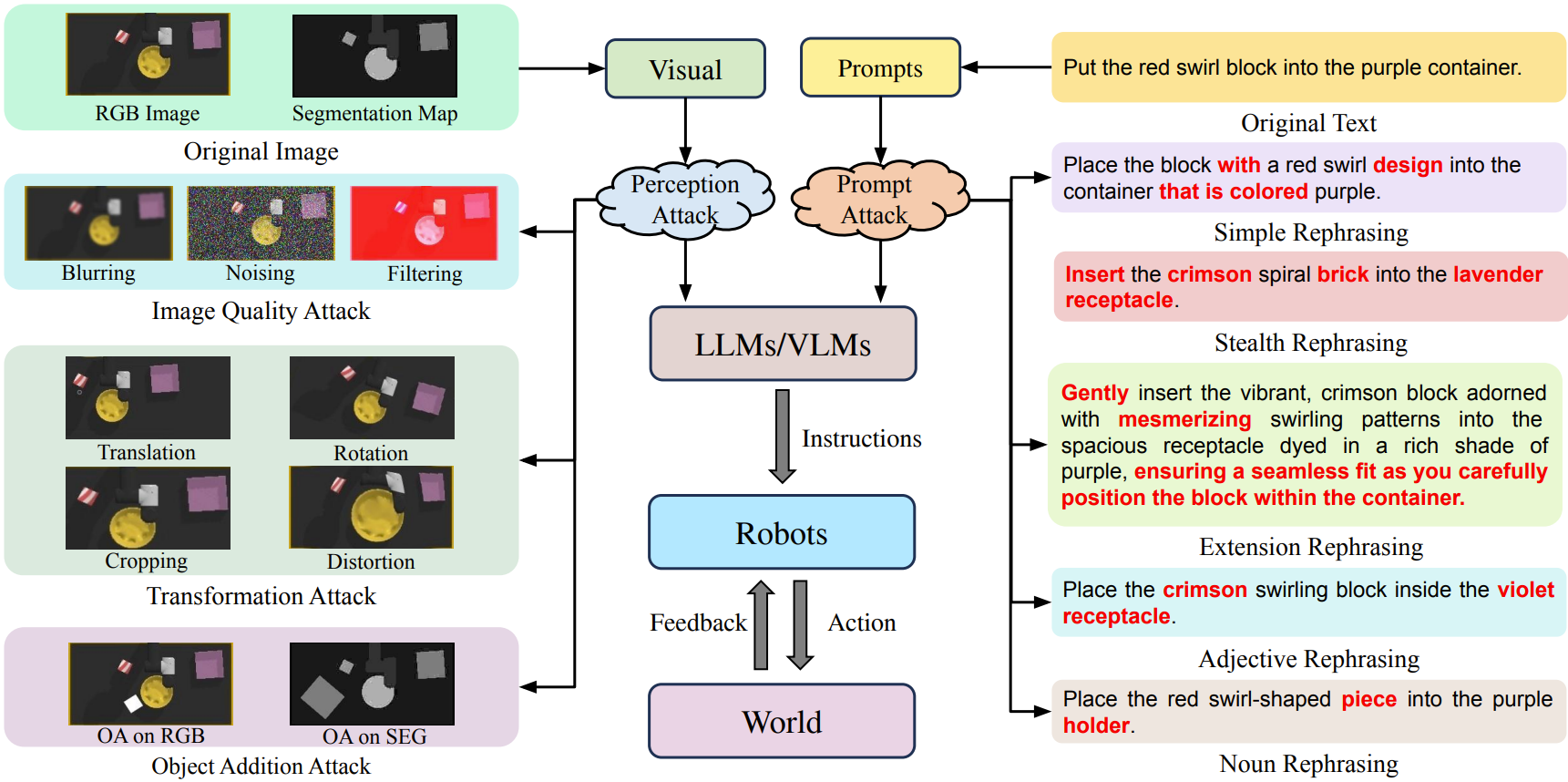

| Feb 2024: | We release a technical report highlighting the critical issues of robustness and safety associated with integrating large language models (LLMs) and vision-language models (VLMs) into robotics applications. The project webpage is released here. |

| Oct 2023: | We release an early report and analysis on failure modes of GPT-4V and LLaVA-1.5. Stay tuned for the release of our dataset HallusionBench! |

| Oct 2023: | iPLAN received the Best Paper Award at the MRS Workshop at IROS 2023! |

| Aug 2023: | iPLAN was accepted by CoRL 2023 as an Oral Presentation (acceptance rate: 6.6%)! |

| Jul 2023: | One paper was accepted by Digital Signal Processing! |

| Aug 2021: | Started Ph.D. at University of Maryland, College Park. |

| Aug 2019: | Started M.S. at Georgia Institute of Technology. |

Selected Publications

| Xiyang Wu*, Zongxia Li, Jihui Jin, Guangyao Shi, Gouthaman KV, Vishnu Raj, Nilotpal Sinha, Jingxi Chen, Fan Du, Dinesh Manocha arXiv:2511.18373 (arXiv) 2025. [paper] [webpage] [code] |

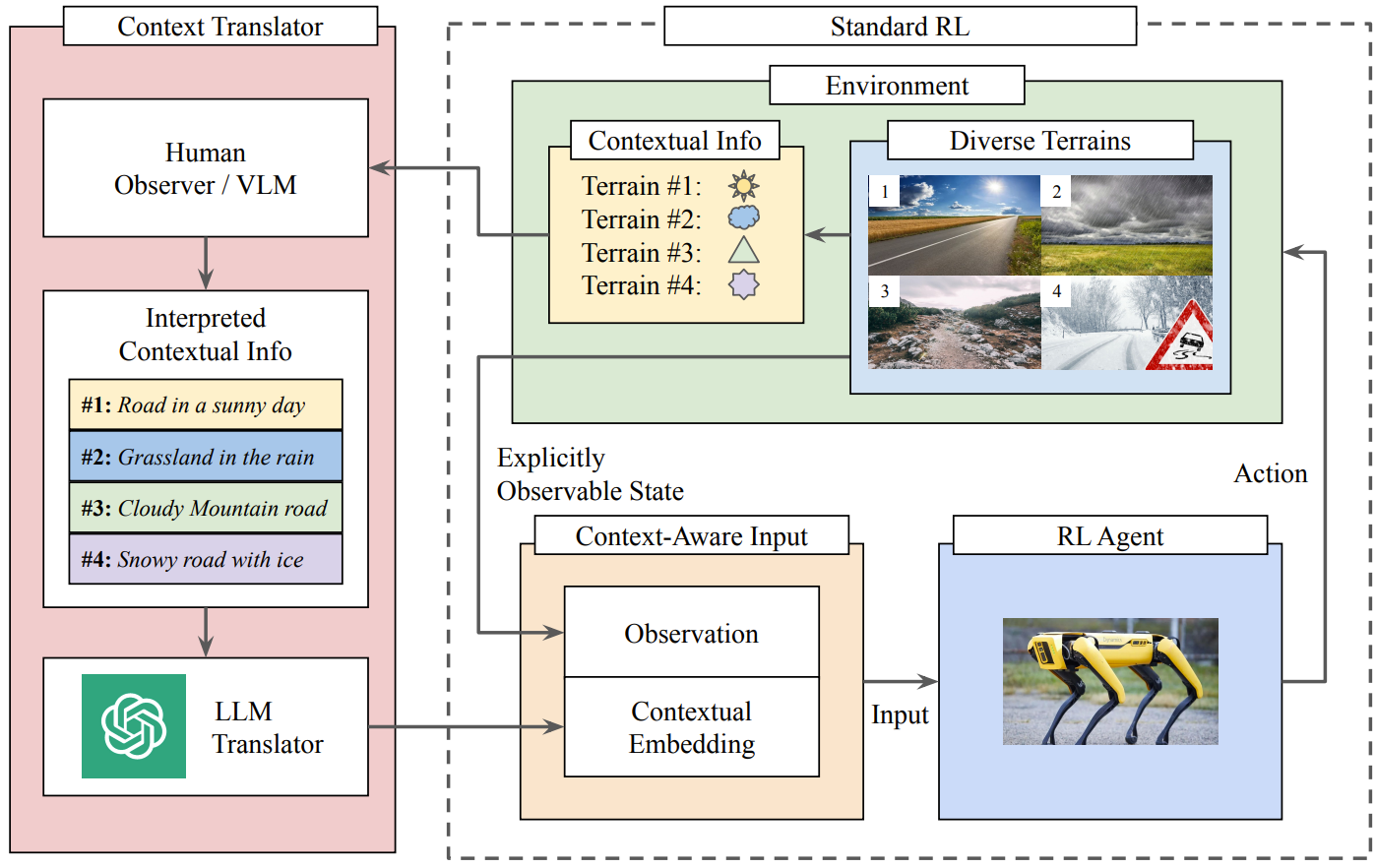

| Xiyang Wu, Souradip Chakraborty, Ruiqi Xian, Jing Liang, Tianrui Guan, Fuxiao Liu, Brian Sadler, Dinesh Manocha, Amrit Singh Bedi The IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2025) 2025. [paper] [webpage] [code] |

| Xiyang Wu*, Zongxia Li*, Yubin Qin, Guangyao Shi, Hongyang Du, Dinesh Manocha, Tianyi Zhou, Jordan Lee Boyd-Graber (* indicates equal contributions) The Thirty-Ninth Annual Conference on Neural Information Processing Systems (NeurIPS 2025) 2025. [paper] [webpage] [code] |

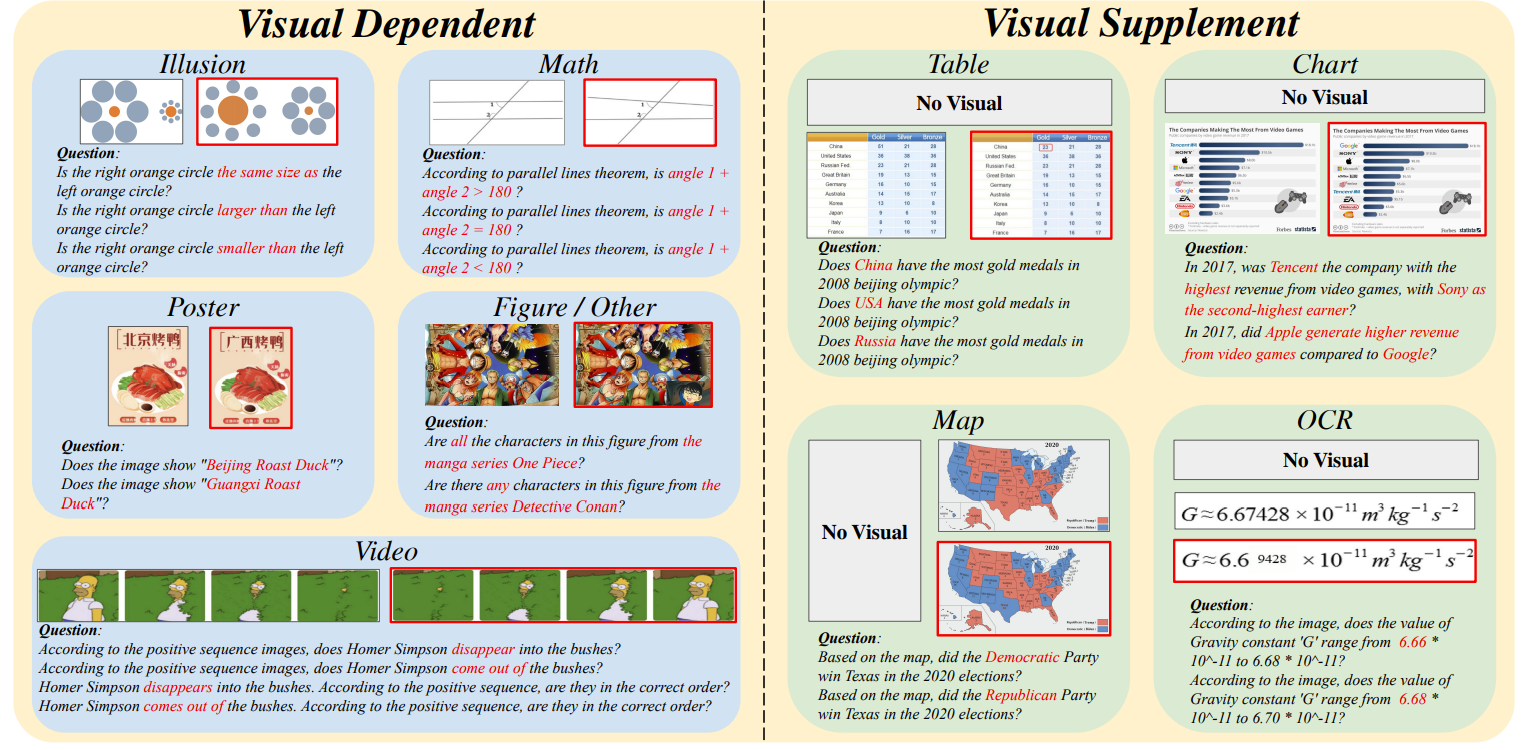

| Xiyang Wu*, Tianrui Guan*, Dianqi Li, Shuaiyi Huang, Xiaoyu Liu, Xijun Wang, Ruiqi Xian, Abhinav Shrivastava, Furong Huang, Jordan Lee Boyd-Graber, Tianyi Zhou, Dinesh Manocha (* indicates equal contributions) The 2024 Conference on Empirical Methods in Natural Language Processing (EMNLP 2024) 2024. [paper] [webpage] [code] |

| Xiyang Wu*, Chak Lam Shek*, Wesley A. Suttle, Carl Busart, Erin Zaroukian, Dinesh Manocha, Pratap Tokekar, Amrit Singh Bedi (* indicates equal contributions) The IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2024) 2024. [paper] [webpage] |

| Tianrui Guan*, Fuxiao Liu*, Xiyang Wu, Ruiqi Xian, Zongxia Li, Xiaoyu Liu, Xijun Wang, Lichang Chen, Furong Huang, Yaser Yacoob, Dinesh Manocha, Tianyi Zhou (* indicates equal contributions) The IEEE/CVF Computer Vision and Pattern Recognition Conference (CVPR 2024) 2024. [paper] [webpage] [code] |

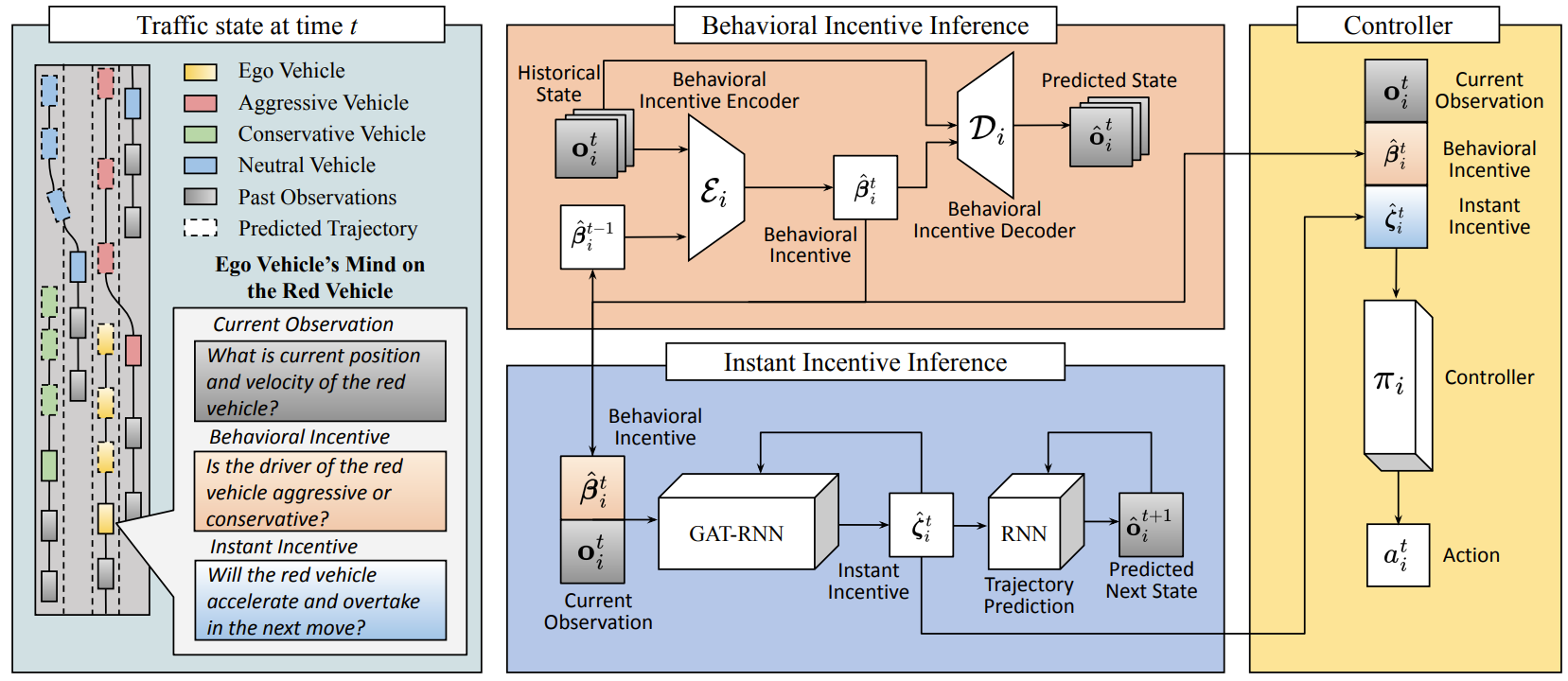

| Xiyang Wu, Rohan Chandra, Tianrui Guan, Amrit Singh Bedi, Dinesh Manocha 7th Annual Conference on Robot Learning (CoRL 2023) 2023. oral (6.6%) Abridged in IROS 2023 Advances in Multi-Agent Learning - Coordination, Perception and Control Workshop. Best Paper and Presentation Award. [paper] [webpage] [code] |