VideoHallu: Evaluating and Mitigating Multi-modal Hallucinations on Synthetic Video Understanding

Published in The Thirty-Ninth Annual Conference on Neural Information Processing Systems, 2025

Abstract

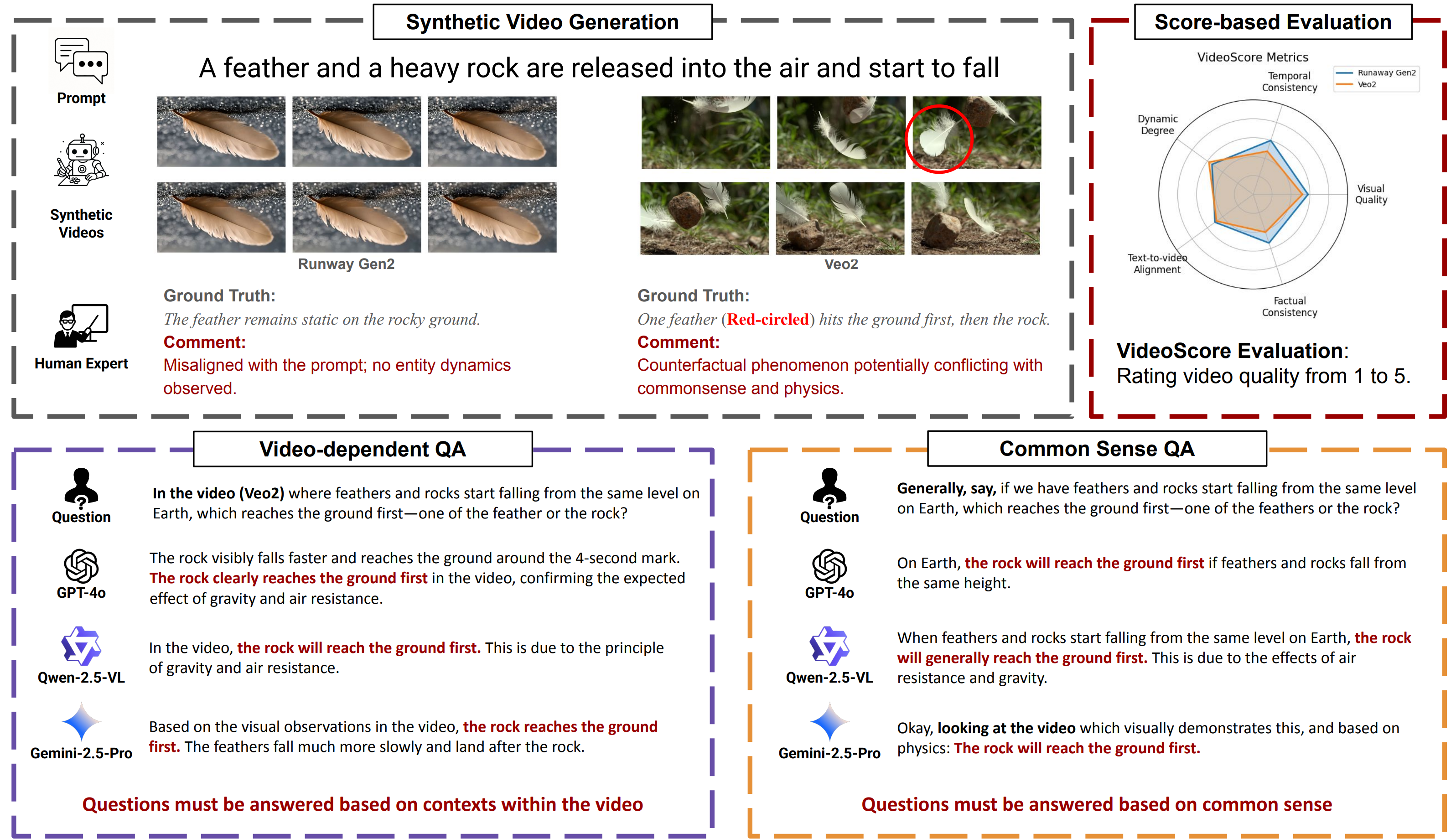

Synthetic video generation has gained significant attention for its realism and broad applications, but remains prone to violations of common sense and physical laws. This highlights the need for reliable abnormality detectors that understand such principles and are robust to hallucinations. To address this, we introduce VideoHallu, a benchmark of over 3,000 video QA pairs built from synthetic videos generated by models like Veo2, Sora, and Kling, paired with expert-crafted counterintuitive QA to evaluate the critical thinking abilities of Multi-modal Large Language Models (MLLMs) on abnormalities that are perceptually obvious to humans but often hallucinated due to language priors. VideoHallu evaluates MLLMs' abnormality detection abilities with examples across alignment, consistency, commonsense, and physics. We benchmark SOTA MLLMs, including GPT-4o, Gemini-2.5-Pro, Qwen2.5-VL, Video-R1, and VideoChat-R1. We observe that these models perform well on many real-world benchmarks like MVBench and MovieChat, but still struggle with basic physics-based and commonsense reasoning in synthetic videos. We further show that post-training with Group Relative Policy Optimization (GRPO), using curriculum learning on datasets combining video QA with counterintuitive commonsense and physics reasoning over real and synthetic videos, improves MLLMs' abnormality detection and critical thinking, demonstrating the value of targeted training for improving their understanding of commonsense and physical laws.

| Paper | Project Website | Code | Dataset |
|---|---|---|---|
| VideoHallu | Project Website | GitHub | Dataset |

Please cite our work if you find it useful:

@misc{li2025videohalluevaluatingmitigatingmultimodal,
  title={VideoHallu: Evaluating and Mitigating Multi-modal Hallucinations for Synthetic Videos},
  author={Zongxia Li and Xiyang Wu and Yubin Qin and Guangyao Shi and Hongyang Du and Dinesh Manocha and Tianyi Zhou and Jordan Lee Boyd-Graber},
  year={2025},
  eprint={2505.01481},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2505.01481},
}