MASS: Motion-Aware Spatial-Temporal Grounding for Physics Reasoning and Comprehension in Vision-Language Models

Published in The IEEE / CVF Computer Vision and Pattern Recognition Conference, 2026

Abstract

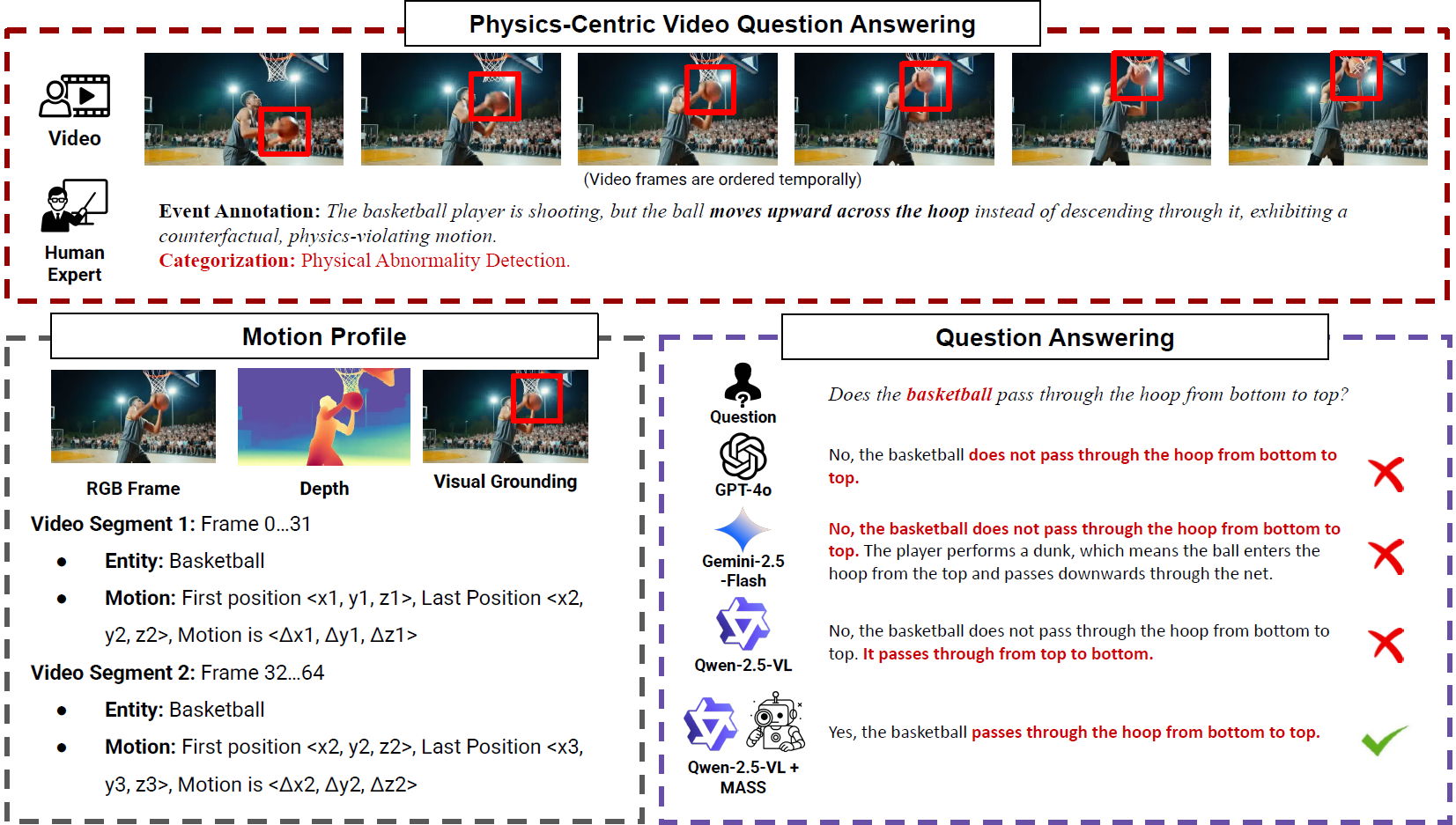

Vision Language Models (VLMs) perform well on standard video tasks but struggle with physics-driven reasoning involving motion dynamics and spatial interactions. This limitation reduces their ability to interpret real or AI-generated content (AIGC) videos and to generate physically consistent content. We present an approach that addresses this gap by translating physical-world context cues into interpretable representations aligned with VLMs' perception, comprehension, and reasoning. We introduce MASS-Bench, a comprehensive benchmark consisting of 4,350 real-world and AIGC videos and 8,361 free-form video question-answering pairs focused on physics-related comprehension tasks, with detailed annotations including visual detections, sub-segment grounding, and full-sequence 3D motion tracking of entities. We further present MASS, a model-agnostic method that injects spatial-temporal signals into the VLM language space via depth-based 3D encoding and visual grounding, coupled with a motion tracker for object dynamics. To strengthen cross-modal alignment and reasoning, we apply reinforcement fine-tuning. Experiments and ablations show that our refined VLMs outperform comparable and larger baselines, as well as prior state-of-the-art models, by 8.7% and 6.0%, achieving performance comparable to closed-source SoTA VLMs such as Gemini-2.5-Flash on physics reasoning and comprehension. These results validate the effectiveness of our approach.
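To make the idea of injecting depth-based 3D motion signals into the language space more concrete, the following is a minimal illustrative sketch, not the released MASS implementation. It assumes a standard pinhole camera model and a tracker that already provides per-frame pixel coordinates and depth for an entity. The names `Intrinsics`, `backproject`, and `track_to_text`, as well as the text format, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point3D = Tuple[float, float, float]


@dataclass
class Intrinsics:
    """Hypothetical pinhole camera intrinsics, in pixels (an assumption)."""
    fx: float
    fy: float
    cx: float
    cy: float


def backproject(u: float, v: float, depth: float, k: Intrinsics) -> Point3D:
    """Lift a pixel (u, v) with metric depth into 3D camera coordinates
    using the standard pinhole back-projection."""
    x = (u - k.cx) * depth / k.fx
    y = (v - k.cy) * depth / k.fy
    return (x, y, depth)


def track_to_text(name: str, times: List[float], points: List[Point3D]) -> str:
    """Serialize a 3D track as plain text that could be placed in a VLM prompt.
    The format here is an illustrative assumption, not the paper's format."""
    parts = [
        f"t={t:.2f}s: ({x:.2f}, {y:.2f}, {z:.2f})"
        for t, (x, y, z) in zip(times, points)
    ]
    return f"{name} 3D track [m]: " + "; ".join(parts)


if __name__ == "__main__":
    cam = Intrinsics(fx=500.0, fy=500.0, cx=320.0, cy=240.0)
    # Two hypothetical tracker observations: (u, v, depth in meters).
    observations = [(320.0, 240.0, 2.0), (420.0, 240.0, 2.0)]
    pts = [backproject(u, v, d, cam) for u, v, d in observations]
    print(track_to_text("ball", [0.0, 0.5], pts))
```

In this sketch the motion cue reaches the model purely as text, which is one simple way a model-agnostic pipeline could expose object dynamics without changing the VLM architecture.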

| Paper | Project Website | Code | Dataset |
|---|---|---|---|
| MASS | To Be Released | To Be Released | To Be Released |

Please cite our work if you find it useful:

@misc{wu2025massmotionawarespatialtemporalgrounding,
  title={MASS: Motion-Aware Spatial-Temporal Grounding for Physics Reasoning and Comprehension in Vision-Language Models},
  author={Xiyang Wu and Zongxia Li and Jihui Jin and Guangyao Shi and Gouthaman KV and Vishnu Raj and Nilotpal Sinha and Jingxi Chen and Fan Du and Dinesh Manocha},
  year={2025},
  eprint={2511.18373},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2511.18373},
}